Improving Human Legibility in Collaborative Robot Tasks through Augmented Reality and Workspace Preparation

Yi-Shiuan Tung, Matthew B. Luebbers, Alessandro Roncone, and Bradley Hayes

Abstract

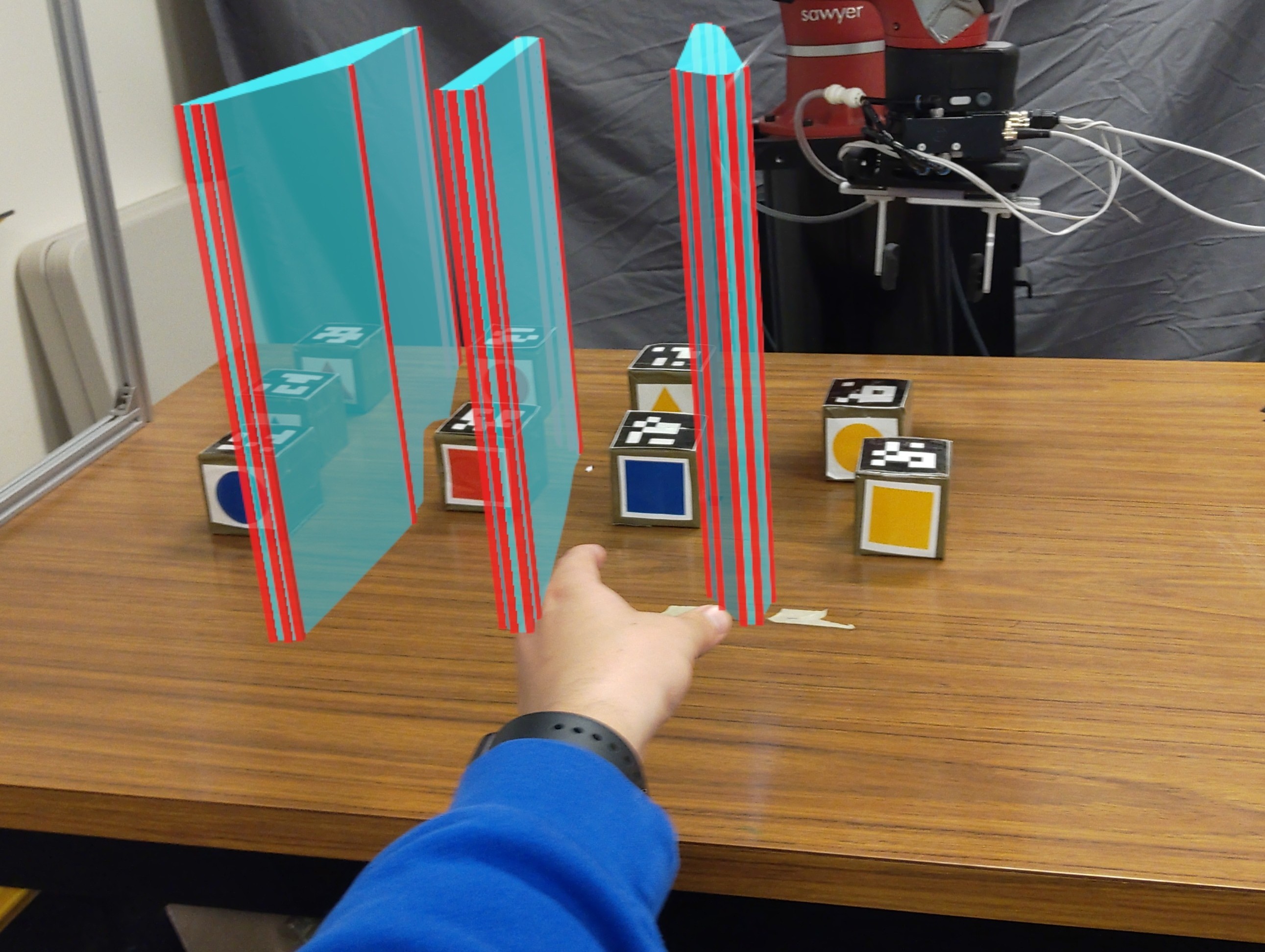

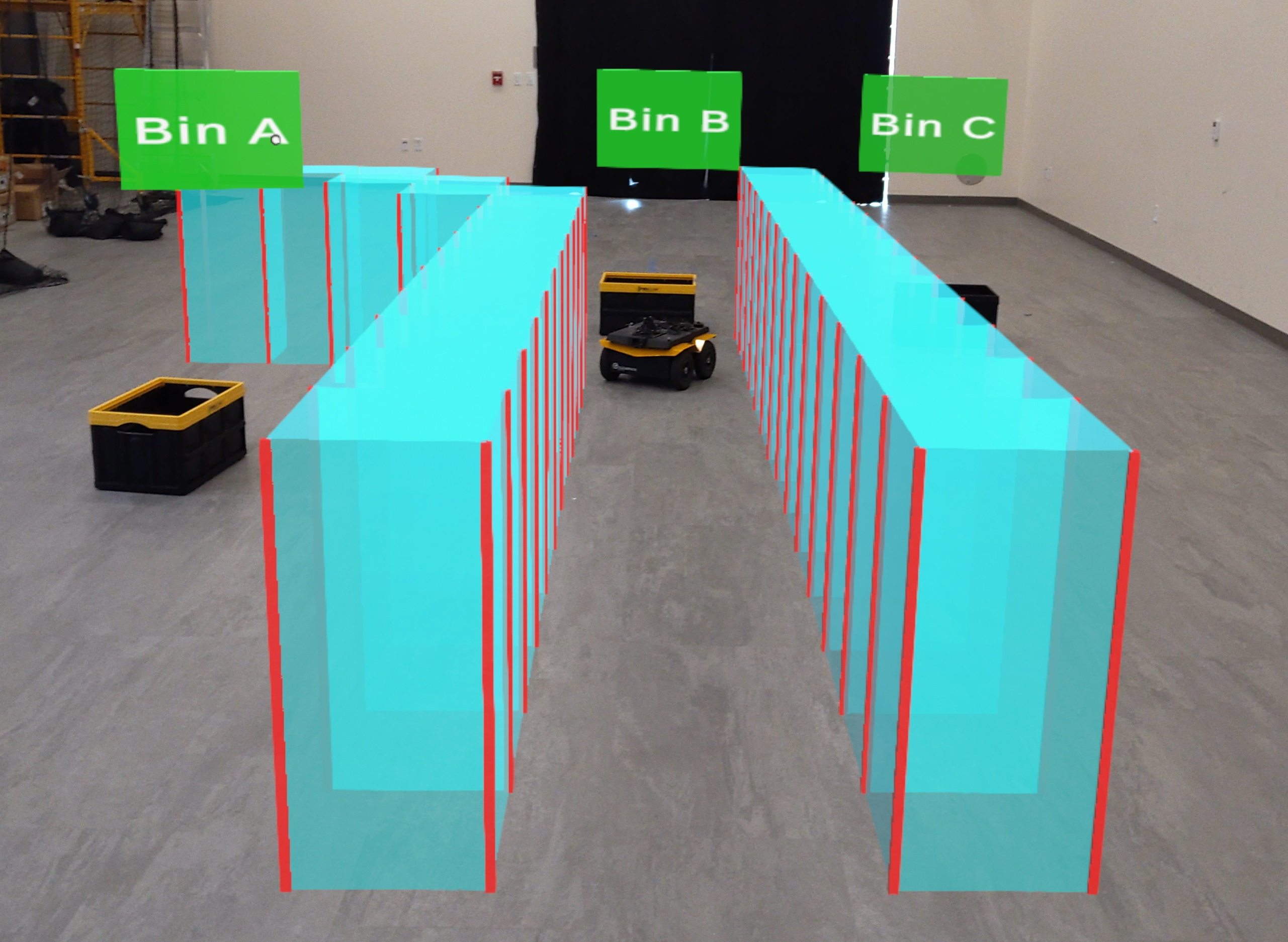

Understanding the intentions of human teammates is critical for safe and effective human-robot interaction. The canonical approach to human-aware robot motion planning is to first predict the human's goal or path and then construct a robot plan that avoids collision with the human. This approach can produce unsafe interactions when the human model, and therefore its predictions, are inaccurate. In this work, we present an algorithmic approach that arranges the configuration of objects in a shared human-robot workspace and projects virtual obstacles in augmented reality, optimizing the legibility of human behavior for a given task. These workspace changes make human behavior more legible, which improves the robot's predictions of human goals and, in turn, task fluency and safety. To evaluate our approach, we propose two user studies: a collaborative tabletop task with a manipulator robot and a warehouse navigation task with a mobile robot.
